Meta is in ongoing discussions with Google to use Google's Tensor Processing Units (TPUs).

About Tensor Processing Units

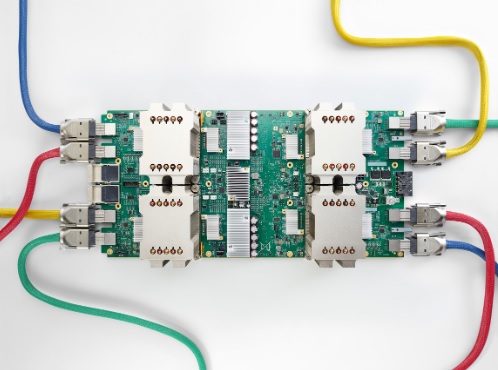

- A TPU is a specialized chip designed to accelerate AI and machine learning (ML) tasks.

- Unlike general-purpose central processing units (CPUs) or graphics processing units (GPUs), TPUs are designed specifically to handle the large volumes of calculations that deep learning models require.

- Google announced TPUs in 2016, having already used them in its own data centers for about a year, to improve the performance of AI applications such as Google Search, Google Translate, and Google Photos.

- Since then, TPUs have become a core part of Google's AI infrastructure, and Google also rents them to outside customers through Google Cloud.

How TPUs Work

- AI models rely heavily on a type of mathematical operation called tensor computation, most importantly large matrix multiplications.

- A tensor is a multidimensional array of numbers: a single number is a 0-dimensional tensor, a list of numbers is 1-dimensional, a table of numbers is 2-dimensional, and so on.

- Deep learning models store their inputs, learned weights, and intermediate results as tensors, and produce predictions by repeatedly multiplying and adding these tensors.

- TPUs are optimized for tensor computations, which lets them process large datasets much faster than CPUs and, for many AI workloads, match or outperform GPUs.
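To make the idea of tensors concrete, here is a minimal plain-Python sketch (no TPU involved) that represents tensors of different ranks as nested lists and computes one neural-network layer as a matrix multiplication followed by an addition. The helper names `shape`, `matmul`, and `dense_layer` are hypothetical, and the ReLU step (replacing negative values with zero) is an illustrative choice rather than something the text specifies.

```python
# Tensors of increasing rank, represented as nested Python lists.
scalar = 3.0                          # rank 0: a single number
vector = [1.0, 2.0, 3.0]              # rank 1: a list of numbers
matrix = [[1.0, 2.0], [3.0, 4.0]]     # rank 2: a table (rows x columns)
batch = [matrix, matrix]              # rank 3: a stack of tables


def shape(t):
    """Return the shape of a rectangular nested-list tensor."""
    dims = []
    while isinstance(t, list):
        dims.append(len(t))
        t = t[0]
    return tuple(dims)


def matmul(a, b):
    """Multiply an (n x k) matrix by a (k x m) matrix."""
    k = len(b)
    assert all(len(row) == k for row in a), "inner dimensions must match"
    m = len(b[0])
    return [[sum(a[i][p] * b[p][j] for p in range(k)) for j in range(m)]
            for i in range(len(a))]


def dense_layer(x, w, bias):
    """Hypothetical fully connected layer: relu(x @ w + bias)."""
    z = matmul(x, w)  # the tensor computation accelerators are built for
    return [[max(0.0, z[i][j] + bias[j]) for j in range(len(bias))]
            for i in range(len(z))]


# One input example with two features, passed through a layer with three outputs.
x = [[1.0, 2.0]]
w = [[0.5, -1.0, 2.0],
     [1.0, 0.0, -1.0]]
bias = [0.0, 0.5, 0.0]
print(shape(batch))             # (2, 2, 2)
print(dense_layer(x, w, bias))  # [[2.5, 0.0, 0.0]]
```

A real model stacks many such layers with far larger matrices, so almost all of its running time goes into `matmul`-style work, which is exactly the operation TPUs specialize in.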

How TPUs Achieve Faster Processing

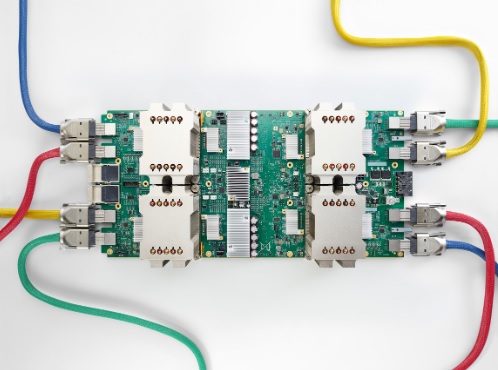

- Massive parallelism: TPUs perform thousands of multiply-and-add calculations simultaneously in a grid of arithmetic units called a systolic array, making them highly efficient.

- Energy efficiency: for the AI workloads they are designed for, TPUs typically deliver more computation per watt than general-purpose processors.

- Specialized circuits: TPUs contain circuits built specifically for AI workloads, avoiding much of the extra work and hardware that general-purpose chips need but AI calculations do not.
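The parallelism point can be illustrated with a small simulation of a systolic array: a grid of simple multiply-accumulate (MAC) units through which data is pumped in lockstep, so that each value read from memory is reused by many units. The plain-Python sketch below models an output-stationary array cycle by cycle under our own simplifying assumptions. The function name `systolic_matmul` is hypothetical, and real TPUs may use a different dataflow, much larger arrays, and reduced-precision arithmetic.

```python
def systolic_matmul(A, B):
    """Simulate an n x m output-stationary systolic array computing A @ B.

    Returns the product and the number of multiply-accumulates (MACs)
    performed in each clock cycle.
    """
    n, K, m = len(A), len(B), len(B[0])
    assert all(len(row) == K for row in A), "inner dimensions must match"
    acc = [[0] * m for _ in range(n)]       # one accumulator per processing element (PE)
    a_reg = [[None] * m for _ in range(n)]  # A value currently held by each PE
    b_reg = [[None] * m for _ in range(n)]  # B value currently held by each PE
    macs_per_cycle = []
    for t in range(n + m + K - 2):          # cycles until the last PE finishes
        new_a = [[None] * m for _ in range(n)]
        new_b = [[None] * m for _ in range(n)]
        for i in range(n):
            for j in range(m):
                # Row i of A enters from the left, delayed (skewed) by i cycles;
                # other PEs take the value their left neighbor held last cycle.
                if j == 0:
                    k = t - i
                    new_a[i][j] = A[i][k] if 0 <= k < K else None
                else:
                    new_a[i][j] = a_reg[i][j - 1]
                # Column j of B enters from the top, delayed by j cycles;
                # other PEs take the value their upper neighbor held last cycle.
                if i == 0:
                    k = t - j
                    new_b[i][j] = B[k][j] if 0 <= k < K else None
                else:
                    new_b[i][j] = b_reg[i - 1][j]
        a_reg, b_reg = new_a, new_b
        # Every PE holding a matching pair does one MAC in the same cycle.
        macs = 0
        for i in range(n):
            for j in range(m):
                if a_reg[i][j] is not None and b_reg[i][j] is not None:
                    acc[i][j] += a_reg[i][j] * b_reg[i][j]
                    macs += 1
        macs_per_cycle.append(macs)
    return acc, macs_per_cycle


C, macs = systolic_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])
print(C)     # [[19, 22], [43, 50]]
print(macs)  # [1, 3, 3, 1]
```

In hardware the two inner loops do not exist: every unit in the grid acts in the same clock cycle, so the MAC counts show how many units are busy at once. With a large array and long streams of data, nearly every unit is busy on nearly every cycle, which is where the speed and energy savings come from.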

While CPUs are well suited to general-purpose tasks and GPUs are an excellent choice for graphics and many AI workloads, TPUs are designed specifically to run AI models faster and more efficiently.